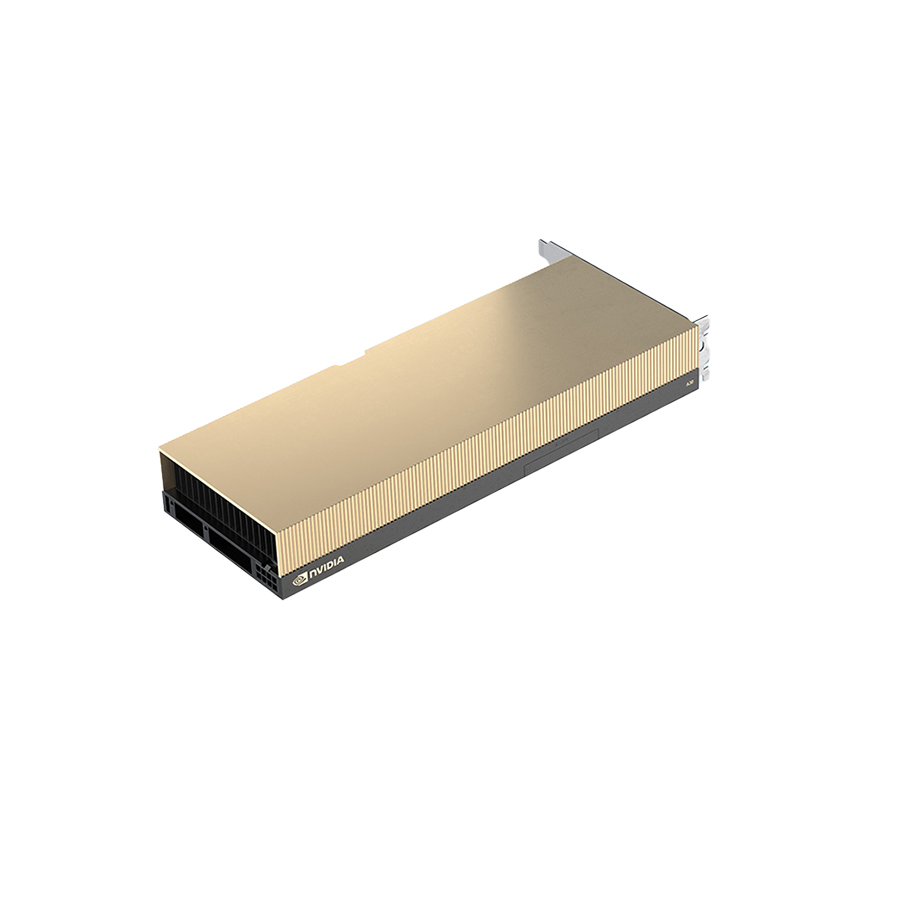

NVIDIA A30 Tensor Core 24GB graphics card

116.110.000₫

Price excludes 10% VAT

Product information

- GPU Memory: 24GB HBM2

- Memory bandwidth: 933GB/s

- FP64: 5.2 teraFLOPS

- FP32: 10.3 teraFLOPS

- Interface: PCI Express 4.0

- Max Power Consumption: 165W
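The FP32 and memory-bandwidth figures above can be combined into a rough "ridge point": the arithmetic intensity (FLOP per byte of memory traffic) below which a kernel is limited by bandwidth rather than compute. This is only an illustration under an assumption not stated on this page: it uses the simple roofline model (attainable throughput = min(peak compute, bandwidth × arithmetic intensity)), and the helper names `ridge_point` and `attainable_flops` are hypothetical. Real kernels typically reach neither peak.

```python
# Rough roofline estimate from the A30 figures listed above.
# Assumption: the simple roofline model; measured throughput is usually lower.

PEAK_FP32_FLOPS = 10.3e12      # FP32 (non-Tensor Core) peak, FLOP/s
MEM_BANDWIDTH_BYTES = 933e9    # HBM2 memory bandwidth, bytes/s


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Arithmetic intensity (FLOP/byte) at which a kernel stops being memory-bound."""
    return peak_flops / bandwidth


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline upper bound on FLOP/s for a kernel with the given FLOP/byte ratio."""
    return min(peak_flops, bandwidth * intensity)


if __name__ == "__main__":
    rp = ridge_point(PEAK_FP32_FLOPS, MEM_BANDWIDTH_BYTES)
    print(f"FP32 ridge point: {rp:.1f} FLOP/byte")

    # Example: FP32 vector add c = a + b reads 8 bytes and writes 4 bytes
    # per floating-point add, ignoring caches, so intensity = 1/12 FLOP/byte.
    vadd_intensity = 1 / 12
    bound = attainable_flops(vadd_intensity, PEAK_FP32_FLOPS, MEM_BANDWIDTH_BYTES)
    print(f"Vector add upper bound: {bound / 1e9:.1f} GFLOP/s")
```

With these numbers the ridge point is roughly 11 FLOP/byte, so a streaming operation such as a vector add is bounded by memory bandwidth, not by the 10.3 teraFLOPS FP32 peak.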

Product information: NVIDIA A30 24GB CoWoS HBM2 PCIe 4.0 GPU

AI Inference and Mainstream Compute for Every Enterprise

Bring accelerated performance to every enterprise workload with NVIDIA A30 Tensor Core GPUs. With NVIDIA Ampere architecture Tensor Cores and Multi-Instance GPU (MIG), A30 delivers speedups securely across diverse workloads, including AI inference at scale and high-performance computing (HPC) applications. By combining high memory bandwidth and low power consumption in a PCIe form factor suited to mainstream servers, A30 enables an elastic data center and delivers maximum value for enterprises.

Specifications: NVIDIA A30 24GB CoWoS HBM2 PCIe 4.0 GPU

| Specification | NVIDIA A30 24GB CoWoS HBM2 PCIe 4.0 |
|---|---|
| FP64 | 5.2 teraFLOPS |
| FP64 Tensor Core | 10.3 teraFLOPS |
| FP32 | 10.3 teraFLOPS |
| TF32 Tensor Core | 82 teraFLOPS / 165 teraFLOPS* |
| BFLOAT16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| FP16 Tensor Core | 165 teraFLOPS / 330 teraFLOPS* |
| INT8 Tensor Core | 330 TOPS / 661 TOPS* |
| INT4 Tensor Core | 661 TOPS / 1321 TOPS* |
| Media engines | 1 optical flow accelerator (OFA), 1 JPEG decoder (NVJPEG), 4 video decoders (NVDEC) |
| GPU memory | 24GB HBM2 |
| GPU memory bandwidth | 933GB/s |
| Interconnect | PCIe Gen4: 64GB/s; third-gen NVLink: 200GB/s |
| Form factor | Dual-slot, full-height, full-length (FHFL) |
| Max thermal design power (TDP) | 165W |
| Multi-Instance GPU (MIG) | 4 GPU instances @ 6GB each; 2 GPU instances @ 12GB each; 1 GPU instance @ 24GB |
| Virtual GPU (vGPU) software support | NVIDIA AI Enterprise; NVIDIA Virtual Compute Server |

\* With sparsity
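The MIG row describes three homogeneous ways to split the 24GB of HBM2. The Python sketch below encodes only those three listed layouts to check whether a workload of a given memory footprint fits in one instance. The dictionary `A30_MIG_PROFILES` and the helpers `memory_per_instance` and `fits` are hypothetical; mixed layouts are not modelled, and the usable memory the driver reports per instance may be somewhat lower than these nominal figures.

```python
# Hypothetical helper built only from the MIG row of the specification table.
# Assumption: homogeneous layouts only (4 x 6GB, 2 x 12GB, 1 x 24GB), nominal sizes.

TOTAL_MEMORY_GB = 24

# number of GPU instances -> nominal memory per instance (GB)
A30_MIG_PROFILES = {4: 6, 2: 12, 1: 24}


def memory_per_instance(instances: int) -> int:
    """Return nominal GB per MIG instance for one of the listed layouts."""
    if instances not in A30_MIG_PROFILES:
        raise ValueError(f"{instances} instances is not one of the listed A30 MIG layouts")
    return A30_MIG_PROFILES[instances]


def fits(instances: int, workload_gb: float) -> bool:
    """True if a workload needing workload_gb fits in a single instance of the layout."""
    return workload_gb <= memory_per_instance(instances)


if __name__ == "__main__":
    for n, gb in sorted(A30_MIG_PROFILES.items(), reverse=True):
        # Each listed layout partitions the full 24GB.
        assert n * gb == TOTAL_MEMORY_GB
        print(f"{n} instance(s) x {gb}GB")
    print(fits(4, 5.0), fits(4, 8.0), fits(2, 8.0))
```

For example, a workload needing about 8GB does not fit a 6GB instance in the 4-way layout but does fit a 12GB instance in the 2-way layout.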

